PRIN RexLearn project

Reliable and Explainable Adversarial Machine Learning

Project coordinator

Prof. Fabio Roli

PRA Lab – University of Cagliari

Duration

36 Months

August 2019 – August 2023

Budget

Total budget: 914640 €

MUR funded budget: 738000 €

Partners

University of Cagliari

University of Venice “Ca’ Foscari”

University of Florence

University of Siena

Funding

Ministry of University and Research

PRIN 2017

Project context and challenges

Machine learning has become pervasive. From self-driving cars to smart devices, almost every consumer application now uses these technologies to make sense of the vast amount of data collected from its users. In some vision tasks, recent deep-learning algorithms have even surpassed human performance. It was therefore very surprising to discover that such algorithms can be easily fooled by adversarial examples, i.e., imperceptible adversarial perturbations to images, text and audio that mislead these systems into perceiving things that are not there. After this phenomenon was widely reported in the press, many stakeholders became interested in understanding the risks associated with the misuse of machine learning, in developing proper mitigation strategies, and in incorporating them into their products.

Despite this widespread interest, the problem is still far from being solved.

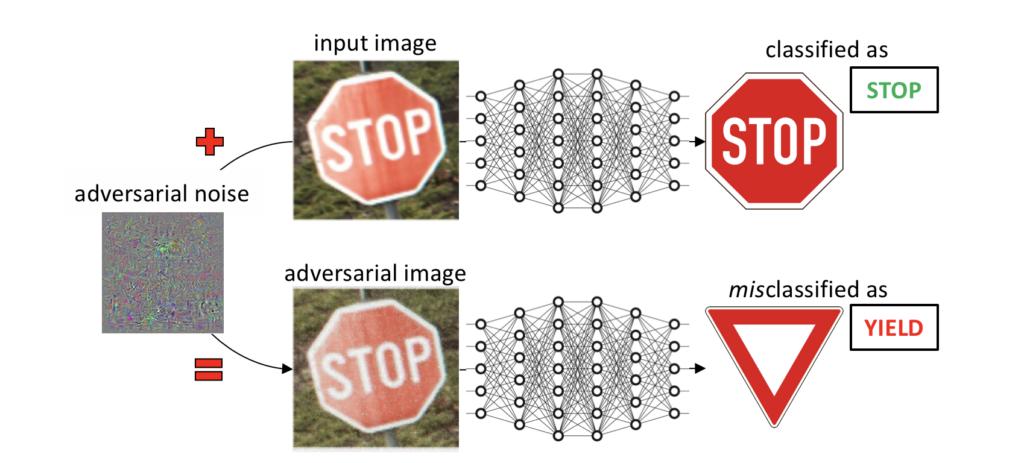

Adversarial manipulation of an input image correctly classified as a stop sign to have it misclassified as a yield sign by a deep neural network. The adversarial noise is magnified for visibility, but remains imperceptible in the resulting adversarial image.
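The caption describes a small, bounded perturbation that flips a classifier's decision. The sketch below illustrates the idea on a hypothetical linear classifier rather than a deep network: because the gradient of a linear score with respect to the input is just the weight vector, a single gradient-sign step (in the spirit of the fast gradient sign method) gives the worst-case change of each feature by at most `eps`. The weights, input, budget and the helper names `score`, `sign` and `gradient_sign_step` are all illustrative assumptions, not code from the project.

```python
# Toy sketch (assumption): a hypothetical linear classifier f(x) = w.x + b over a
# 4-feature input in [0, 1]; f(x) > 0 means "stop", f(x) < 0 means "yield".
# All weights, inputs and the budget eps are made-up illustrative values.

def score(w, b, x):
    """Linear decision function f(x) = w.x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def sign(v):
    """Return +1, 0 or -1 according to the sign of v."""
    return (v > 0) - (v < 0)

def gradient_sign_step(w, x, eps, lo=0.0, hi=1.0):
    """Perturb x to decrease f(x), changing each feature by at most eps.

    For a linear model the gradient of f with respect to x is w, so the
    step -eps * sign(w_i) is the worst-case L-infinity perturbation of size
    eps. Results are clipped to the valid input range [lo, hi].
    """
    return [min(hi, max(lo, xi - eps * sign(wi))) for wi, xi in zip(w, x)]

w = [0.9, -0.4, 0.7, -0.8]
b = -0.05
x = [0.6, 0.5, 0.5, 0.4]

x_adv = gradient_sign_step(w, x, eps=0.15)
print("clean score:", round(score(w, b, x), 3))            # positive -> "stop"
print("adversarial score:", round(score(w, b, x_adv), 3))  # negative -> "yield"
```

No feature moves by more than 0.15, yet the sign of the score flips; in a deep network the gradient is no longer constant, which is why practical attacks iterate such steps.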

Although earlier research in adversarial machine learning had already shown the existence of such adversarial examples against traditional machine-learning algorithms (Barreno et al., 2010; Biggio et al., 2012, 2013, 2014), the phenomenon was widely reported in the press only after Szegedy et al. (2014) showed its impact on deep networks (Castelvecchi, 2016).

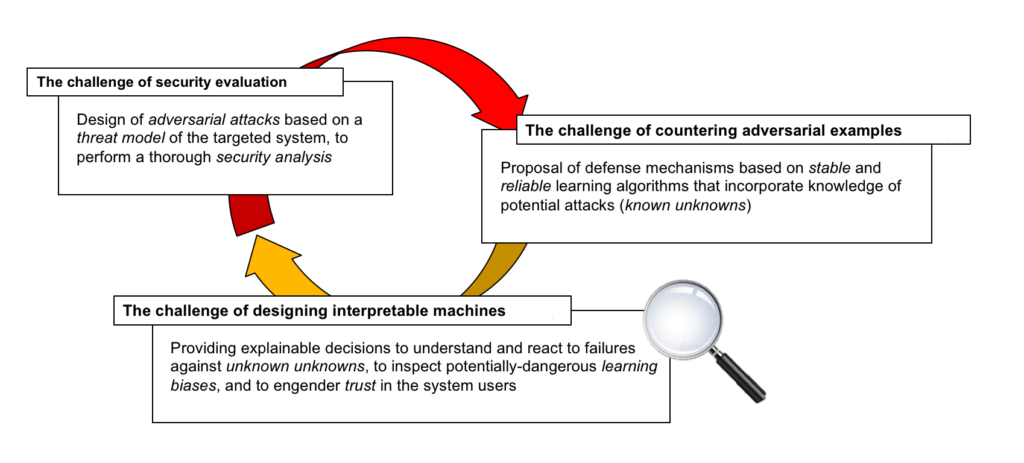

In this project, we identified three main challenges that are hindering progress towards secure machine-learning technologies, and advocated the use of novel methodological approaches to tackle them.

1. The challenge of security evaluation

2. The challenge of countering adversarial examples

3. The challenge of designing interpretable machines

These challenges were addressed by developing scientific methodologies that significantly advanced the state of the art.

The first methodological advancement concerned techniques for evaluating the robustness/safety of machine learning algorithms against inputs manipulated to compromise their decisions, known as adversarial examples.
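A common way to report such an evaluation is a security evaluation curve: accuracy measured as the attacker's perturbation budget grows. The sketch below computes one for a hypothetical linear classifier, where the worst-case L-infinity attack has a closed form (the margin drops by at most `eps` times the L1 norm of the weights). The model, data, budgets and helper names are illustrative assumptions; deep networks require an actual optimization-based attack instead of this closed form.

```python
# Sketch (assumption): security evaluation curve for a hypothetical linear
# classifier f(x) = w.x + b under an L-infinity attacker with budget eps.
# Input-range (box) constraints are ignored, i.e., the attacker's best case.

def score(w, b, x):
    """Linear decision function f(x) = w.x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def robust_accuracy(w, b, data, eps):
    """Fraction of samples (x, y), y in {+1, -1}, still correctly classified
    under the worst-case L-infinity perturbation of size eps.

    For a linear model the attacker can lower the margin y*f(x) by at most
    eps * ||w||_1, so a sample survives iff y*f(x) > eps * ||w||_1.
    """
    w_l1 = sum(abs(wi) for wi in w)
    survived = sum(1 for x, y in data if y * score(w, b, x) > eps * w_l1)
    return survived / len(data)

def security_evaluation_curve(w, b, data, budgets):
    """Robust accuracy for each attack budget in `budgets`."""
    return [(eps, robust_accuracy(w, b, data, eps)) for eps in budgets]

w, b = [1.0, -1.0], 0.0
data = [([0.9, 0.1], 1), ([0.6, 0.4], 1), ([0.2, 0.8], -1), ([0.45, 0.55], -1)]

curve = security_evaluation_curve(w, b, data, budgets=[0.0, 0.08, 0.2, 0.5])
for eps, acc in curve:
    print(f"eps={eps:.2f}  robust accuracy={acc:.2f}")
```

On this toy data the accuracy falls from 1.00 with no attack to 0.00 at the largest budget; comparing such curves, rather than a single clean accuracy, is what makes two models' robustness comparable.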

The second concerned techniques for improving the robustness of algorithms against these inputs.
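One family of defenses studied in the project's publications is rejection, where the classifier abstains on inputs it cannot confidently assign (see, e.g., the deep neural rejection and FADER papers listed below). The sketch is a deliberately simplified max-score reject rule, not the exact method of those papers; the assumption behind it is that many adversarial examples fall in regions where the model's scores are low. The labels, scores, threshold and `predict_with_reject` helper are hypothetical.

```python
# Sketch (assumption): a max-score reject option, a simplified stand-in for
# rejection-based defenses. Scores and threshold are hypothetical values; in
# practice they would come from a trained model and be tuned on clean data.

REJECT = "reject"

def predict_with_reject(scores, threshold):
    """Return the top-scoring label, or REJECT if its score is below threshold.

    scores: dict mapping each class label to the model's score for it.
    """
    label, best = max(scores.items(), key=lambda item: item[1])
    return label if best >= threshold else REJECT

print(predict_with_reject({"stop": 0.92, "yield": 0.05}, threshold=0.5))  # stop
print(predict_with_reject({"stop": 0.41, "yield": 0.38}, threshold=0.5))  # reject
```

Raising the threshold rejects more adversarial inputs but also more clean ones, so the threshold is a trade-off to be tuned rather than a fixed constant.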

The third scientific advance involved techniques for improving the interpretability of the decisions provided by these algorithms.
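A simple example of such an explanation is a gradient-based relevance score, the kind of explanation examined for Android malware detection in one of the journal papers listed below. For a linear model the gradient is the weight vector, so "gradient times input" assigns each feature the relevance w_i * x_i, and the relevances add up to the score minus the bias. The feature names, weights and input below are hypothetical, chosen only to illustrate the computation.

```python
# Sketch (assumption): gradient*input relevance for a hypothetical linear model
# f(x) = w.x + b on binary features. Feature names, weights and the input are
# made up for illustration.

def score(w, b, x):
    """Linear decision function f(x) = w.x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def gradient_times_input(w, x):
    """For a linear model the gradient of f w.r.t. x is w, so the relevance
    of feature i is w_i * x_i; relevances sum to f(x) - b."""
    return [wi * xi for wi, xi in zip(w, x)]

def top_features(names, relevance, k):
    """Return the k feature names with the largest absolute relevance."""
    ranked = sorted(zip(names, relevance), key=lambda nr: abs(nr[1]), reverse=True)
    return [name for name, _ in ranked[:k]]

names = ["uses_crypto_api", "requests_sms_permission", "has_launcher_icon"]
w, b = [1.5, 2.0, -0.5], -0.5
x = [1.0, 0.0, 1.0]

relevance = gradient_times_input(w, x)
print(dict(zip(names, relevance)))
print(top_features(names, relevance, k=2))
```

An absent feature gets zero relevance even if its weight is large, which is what distinguishes this explanation of one decision from simply inspecting the model's weights.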

A large collection of datasets was gathered for experimentation on use cases, followed by the development and testing of prototype systems on applications of interest, including image recognition and automatic detection of computer viruses.

Project results and impact

The project produced significant tangible results, including: (i) a doctoral thesis and more than 50 scientific publications, 19 of which were published in international journals and the others in the proceedings of some of the most important conferences in machine learning and artificial intelligence; (ii) the release of benchmark datasets and associated experimental prototypes as various open-source software projects; (iii) intense dissemination and communication activity towards stakeholders and the scientific community.

The project also fostered numerous scientific collaborations at the national and international levels, as demonstrated by the number of publications with co-authors from outside the project team. These collaborations led to formal agreements for carrying out joint activities with companies and international institutions.

Publications on Scientific Journals

- M. Pintor, D. Angioni, A. Sotgiu, L. Demetrio, A. Demontis, B. Biggio, and F. Roli. ImageNet-Patch: A dataset for benchmarking machine learning robustness against adversarial patches. Pattern Recognition, 134:109064, 2023.

- A. E. Cinà, K. Grosse, A. Demontis, B. Biggio, F. Roli, and M. Pelillo. Machine learning security against data poisoning: Are we there yet? IEEE Computer (2024), in press.

- Y. Zheng, L. Demetrio, A. E. Cinà, X. Feng, Z. Xia, X. Jiang, A. Demontis, B. Biggio, and F. Roli. Hardening RGB-D object recognition systems against adversarial patch attacks. Information Sciences, 651:119701, 2023.

- Y. Mirsky, A. Demontis, J. Kotak, R. Shankar, D. Gelei, L. Yang, X. Zhang, M. Pintor, W. Lee, Y. Elovici, and B. Biggio. The threat of offensive AI to organizations. Comput. Secur., 124:103006, 2023.

- Y. Zheng, X. Feng, Z. Xia, X. Jiang, A. Demontis, M. Pintor, B. Biggio, and F. Roli. Why adversarial reprogramming works, when it fails, and how to tell the difference. Information Sciences, 632:130–143, 2023.

- F. Crecchi, M. Melis, A. Sotgiu, D. Bacciu, and B. Biggio. FADER: Fast adversarial example rejection. Neurocomputing, 470:257–268, 2022.

- M. Melis, M. Scalas, A. Demontis, D. Maiorca, B. Biggio, G. Giacinto, and F. Roli. Do gradient-based explanations tell anything about adversarial robustness to android malware? Int’l Journal of Machine Learning and Cybernetics, 13(1):217–232, 2022.

- D. Maiorca, A. Demontis, B. Biggio, F. Roli, and G. Giacinto. Adversarial detection of flash malware: Limitations and open issues. Comput. Secur., 96:101901, 2020.

- A. Sotgiu, A. Demontis, M. Melis, B. Biggio, G. Fumera, X. Feng, and F. Roli. Deep neural rejection against adversarial examples. EURASIP J. Information Security, 2020(5), 2020.

- L. Demetrio, B. Biggio, G. Lagorio, F. Roli, and A. Armando. Functionality-preserving black-box optimization of adversarial windows malware. IEEE Transactions on Information Forensics and Security, 16:3469–3478, 2021.

- L. Demetrio, S. E. Coull, B. Biggio, G. Lagorio, A. Armando, and F. Roli. Adversarial EXEmples: A survey and experimental evaluation of practical attacks on machine learning for Windows malware detection. ACM Trans. Priv. Secur., 24(4), September 2021.

- A. E. Cinà, K. Grosse, A. Demontis, S. Vascon, W. Zellinger, B. A. Moser, A. Oprea, B. Biggio, M. Pelillo, and F. Roli. Wild patterns reloaded: A survey of machine learning security against training data poisoning. ACM Comp. Surv., 55(13s):294:1–294:39, 2023.

- A. E. Cinà, A. Torcinovich, and M. Pelillo. A black-box adversarial attack for poisoning clustering. Pattern Recognition, 122:108306, 2022.

- M. Pintor, L. Demetrio, A. Sotgiu, M. Melis, A. Demontis, and B. Biggio. secml: Secure and explainable machine learning in python. SoftwareX, 18:101095, 2022.

- G. Ciravegna, P. Barbiero, F. Giannini, M. Gori, P. Liò, M. Maggini, and S. Melacci. Logic explained networks. Artificial Intelligence, 314:103822, 2023.

- S. Melacci, G. Ciravegna, A. Sotgiu, A. Demontis, B. Biggio, M. Gori, and F. Roli. Domain knowledge alleviates adversarial attacks in multi-label classifiers. IEEE Trans. Pattern Anal. Mach. Intell., 44(12):9944–9959, 2022.

- M. Tiezzi, G. Marra, S. Melacci, and M. Maggini. Deep constraint-based propagation in graph neural networks. IEEE Trans. Pattern Anal. Mach. Intell., 44(2):727–739, 2022.

- A. Betti, G. Boccignone, L. Faggi, M. Gori, and S. Melacci. Visual features and their own optical flow. Frontiers in Artificial Intelligence, 4:768516, 2021.

- A. Betti, M. Gori, and S. Melacci. Learning visual features under motion invariance. Neural Networks, 126:275–299, 2020.

Book chapters

20. G. Ciravegna, F. Giannini, P. Barbiero, M. Gori, P. Liò, M. Maggini, and S. Melacci. Learning logic explanations by neural networks. In Compendium of Neurosymbolic Artificial Intelligence, ch. 25, pp. 547–558. IOS Press, 2023.

Conference Proceedings

- D. Lazzaro, A. E. Cinà, M. Pintor, A. Demontis, B. Biggio, F. Roli, and M. Pelillo. Minimizing energy consumption of deep learning models by energy-aware training. In ICIAP 2023, pp. 515–526. Springer Nature Switzerland, Cham, 2023.

- G. Floris, R. Mura, L. Scionis, G. Piras, M. Pintor, A. Demontis, and B. Biggio. Improving fast minimum-norm attacks with hyperparameter optimization. ESANN, 2023.

- M. Pintor, L. Demetrio, A. Sotgiu, A. Demontis, N. Carlini, B. Biggio, and F. Roli. Indicators of attack failure: Debugging and improving optimization of adversarial examples. NeurIPS, 2022.

- M. Pintor, L. Demetrio, G. Manca, B. Biggio, and F. Roli. Slope: A first-order approach for measuring gradient obfuscation. ESANN, 2021.

- M. Pintor, F. Roli, W. Brendel, and B. Biggio. Fast minimum-norm adversarial attacks through adaptive norm constraints. NeurIPS, 2021.

- M. Kravchik, B. Biggio, and A. Shabtai. Poisoning attacks on cyber attack detectors for industrial control systems. 36th Annual ACM Symp. Applied Computing, SAC ’21, pp. 116–125, New York, NY, USA, 2021. ACM.

- M. Pintor, L. Demetrio, A. Sotgiu, H.-Y. Lin, C. Fang, A. Demontis, and B. Biggio. Detecting attacks against deep reinforcement learning for autonomous driving. Int’l Conf. on Machine Learning and Cybernetics. IEEE SMC, 2023.

- G. Piras, M. Pintor, A. Demontis, and B. Biggio. Samples on thin ice: Re-evaluating adversarial pruning of neural networks. Int’l Conf. on Machine Learning and Cybernetics. IEEE SMC, 2023.

- A. E. Cinà, S. Vascon, A. Demontis, B. Biggio, F. Roli, and M. Pelillo. The hammer and the nut: Is bilevel optimization really needed to poison linear classifiers? In IJCNN, pp. 1–8, Shenzhen, China, 2021. IEEE.

- G. Pellegrini, A. Tibo, P. Frasconi, A. Passerini, and M. Jaeger. Learning aggregation functions. In Z.-H. Zhou, editor, IJCAI-21, pp. 2892–2898. IJCAI, August 2021.

- S. Marullo, M. Tiezzi, M. Gori, S. Melacci, and T. Tuytelaars. Continual learning with pretrained backbones by tuning in the input space. IEEE IJCNN, pp. 1–9, 2023.

- S. Marullo, M. Tiezzi, M. Gori, and S. Melacci. Being friends instead of adversaries: Deep networks learn from data simplified by other networks. AAAI Conf. on Artificial Intelligence, pp. 7728–7735, 2022.

- S. Marullo, M. Tiezzi, A. Betti, L. Faggi, E. Meloni, and S. Melacci. Continual unsupervised learning for optical flow estimation with deep networks. Conf. on Lifelong Learning Agents (CoLLAs), pp. 183–200, 2022.

- A. Betti, L. Faggi, M. Gori, M. Tiezzi, S. Marullo, E. Meloni, and S. Melacci. Continual learning through Hamilton equations. Conf. on Lifelong Learning Agents (CoLLAs), pp. 201–212, 2022.

- E. Meloni, L. Faggi, S. Marullo, A. Betti, M. Tiezzi, M. Gori, and S. Melacci. PARTIME: Scalable and parallel processing over time with deep neural networks. IEEE ICMLA, pp. 665–670, 2022.

- M. Tiezzi, S. Marullo, L. Faggi, E. Meloni, A. Betti, and S. Melacci. Stochastic coherence over attention trajectory for continuous learning in video streams. IJCAI, pp. 3480–3486, 2022.

- M. Tiezzi, S. Marullo, A. Betti, E. Meloni, L. Faggi, M. Gori, and S. Melacci. Foveated neural computation. ECML/PKDD (3), pp. 19–35, 2022.

- E. Meloni, M. Tiezzi, L. Pasqualini, M. Gori, and S. Melacci. Messing up 3D virtual environments: Transferable adversarial 3D objects. IEEE Int’l Conf. on Machine Learning and Applications (ICMLA), pp. 1–8, 2021.


- S. Marullo, M. Tiezzi, M. Gori, and S. Melacci. Friendly training: Neural networks can adapt data to make learning easier. IEEE IJCNN, pp. 1–8, 2021.

- G. Ciravegna, F. Giannini, S. Melacci, M. Maggini, and M. Gori. A constraint-based approach to learning and explanation. AAAI Conf. on Artificial Intelligence, pp. 3658–3665, 2020.

- M. Tiezzi, G. Marra, S. Melacci, M. Maggini, and M. Gori. A Lagrangian approach to information propagation in graph neural networks. ECAI, pp. 1539–1546, 2020.

- E. Meloni, L. Pasqualini, M. Tiezzi, M. Gori, and S. Melacci. SAILenv: Learning in virtual visual environments made simple. Int’l Conf. on Pattern Recognition (ICPR), pp. 8906–8913, 2020.

- G. Ciravegna, F. Giannini, M. Gori, M. Maggini, and S. Melacci. Human-driven FOL explanations of deep learning. IJCAI, pp. 2234–2240, 2020.

- A. Betti, M. Gori, S. Marullo, and S. Melacci. Developing constrained neural units over time. IEEE IJCNN, pp. 1–8, 2020.

- G. Marra, M. Tiezzi, S. Melacci, A. Betti, M. Maggini, and M. Gori. Local propagation in constraint-based neural networks. IEEE IJCNN, pp. 1–8, 2020.

- M. Tiezzi, S. Melacci, A. Betti, M. Maggini, and M. Gori. Focus of attention improves information transfer in visual features. NeurIPS, 2020.

- A. Betti, M. Gori, S. Melacci, M. Pelillo, and F. Roli. Can machines learn to see without visual databases? In Int’l Workshop on Data Centric AI at the Thirty-Fifth NeurIPS, 2021.

- E. Meloni, A. Betti, L. Faggi, S. Marullo, M. Tiezzi, and S. Melacci. Evaluating continual learning algorithms by generating 3D virtual environments. Int’l Workshop CSSL at IJCAI, pp. 62–74, 2021.

- M. Tiezzi, G. Marra, S. Melacci, M. Maggini, and M. Gori. Lagrangian propagation graph neural networks. In The First Int’l Workshop on Deep Learning on Graphs: Methodologies and Applications (DLGMA 2020), jointly with the AAAI Conf. on Artificial Intelligence, 2020.

- D. Angioni, L. Demetrio, M. Pintor, and B. Biggio. Robust machine learning for malware detection over time. In ITASEC 2022, vol. 3260 of CEUR-WS, pp. 169–180, 2022.

- G. Piras, M. Pintor, L. Demetrio, and B. Biggio. Explaining machine learning DGA detectors from DNS traffic data. In ITASEC 2022, vol. 3260 of CEUR-WS, pp. 150–168, 2022.

Papers under review

- A. E. Cinà, A. Demontis, B. Biggio, F. Roli, and M. Pelillo. Energy-latency attacks via sponge poisoning. arXiv:2203.08147, 2022.

- A. E. Cinà, K. Grosse, S. Vascon, A. Demontis, B. Biggio, F. Roli, and M. Pelillo. Backdoor learning curves: Explaining backdoor poisoning beyond influence functions. arXiv:2106.07214, 2021.

PhD thesis

Marco Melis. Explaining vulnerabilities of machine learning to adversarial attacks. PhD Thesis, PhD Program in Electronic and Computer Engineering (DRIEI), University of Cagliari, Italy, 2021. Supervisors: Fabio Roli, Battista Biggio.

Prototypes

Toucanstrike and SecML-Malware.

Toucanstrike: a command-line tool for launching attacks against machine-learning malware detectors. Built on SecML Malware, it allows testing, in “red-teaming” mode, the vulnerability/robustness of Windows malware detection algorithms against the attacks developed in the RexLearn project [10,11].

SecML.

SecML is the library developed in the RexLearn project [14]. It includes all the attack algorithms developed during the project to test the robustness of machine learning algorithms against adversarial examples and poisoning attacks (i.e., contamination attacks against training data). The library is currently used in other national and European projects, including the ELSA project, and provides the basis for plugins and other libraries (such as SecML Malware) that extend robustness testing to more complex domains, such as the manipulation of binary or source code done in SecML Malware. Within RexLearn, SecML was also used to prototype a platform for robustness/security testing of the visual system of the iCub humanoid robot against “patch” attacks [1].

Open source software

Open-source software was developed to support the main activities described in the project publications listed above. The software can be used to reproduce the experiments and also serves as a starting point for future developments.

All the tools/libraries developed in the project have been released as open source and are listed below, each with its title, its reference article, and its number of lines of code (where available).

- Foveated Neural Computation [37], 7195

- Stochastic Coherence Over Attention Trajectory For Continuous Learning In Video Streams [36], 11872

- PARTIME: Scalable and Parallel Processing Over Time with Deep Neural Networks [35], 1300

- Focus of Attention Improves Information Transfer in Visual Features [46], 9277

- Continual Unsupervised Learning for Optical Flow Estimation with Deep Networks [33], 9673

- Friendly Training [32], 1895

- Lagrangian Propagation in Graph Neural Networks [17], 1422

- Messing Up 3D Virtual Environments: Transferable Adversarial 3D Objects [38], 2154

- SAILenv: Learning in Virtual Visual Environments Made Simple [42], 3016

- Logic Explained Networks [15], 10233

- Sponge poisoning [50], 4796

- Backdoor learning curves [51], 8662

- Beta Poisoning [29], 1356

- Poisoning clustering [13], 4961

- ImageNet-Patch (code & dataset) [1], 2917

- SecML [14], 61188

- SecML Malware [10,11], 4516

- Indicators of Attack Failure [23], 4381

- Fast minimum-norm adversarial attacks [25], 1147